Getting your site to rank on the first page of a search engine results page (SERP) is no easy feat. Search engines exist to discover, understand, and organize content published on the internet so they can show it to users who are searching for it. Ranking begins with how search engines recognize, index, and relate your page within their database. This article covers the three steps search engines take to index your site and how they differ, how to check your indexed pages, and what to look for when the site hasn’t been indexed.

What is site indexing?

A search index is similar to a library index, which lists information about all of the books (or websites) that the library (or search engine) has available (or knows about). This database is the reference that search engines use to determine which sites are most relevant to their users’ queries.

The difference between crawling and indexing

To connect your site with interested users, search engines go through three steps: crawling, indexing, and ranking. While the ranking process changes constantly and a given ranking can rarely be traced to a single cause, you can use a few strategies to increase your ranking for 2023. The general breakdown of the three steps is:

- Crawling: Search engines comb the internet for content, paying specific attention to the code and keywords for each URL they find.

- Indexing: Search engines store and organize the content found during crawling. Once a page is stored in the index, it’s available for display to relevant queries.

- Ranking: Search engines provide links or snippets of content that best answer a searcher’s query, listed by most relevant to least relevant.
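To make the indexing and ranking steps more concrete, here is a minimal Python sketch of a toy index. It is only an illustration and not how any real search engine works: the pages in `PAGES`, the `build_index` and `rank` functions, and the "count matching words" scoring are all assumptions made for this example.

```python
from collections import defaultdict

# Hypothetical pages standing in for crawled content (URL -> text).
PAGES = {
    "https://example.com/": "fresh coffee beans roasted daily",
    "https://example.com/brew": "how to brew coffee at home",
    "https://example.com/tea": "loose leaf tea and teapots",
}

def build_index(pages):
    """Indexing: map each word to the set of URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index

def rank(index, query):
    """Ranking: order URLs by how many query words they contain."""
    scores = defaultdict(int)
    for word in query.lower().split():
        for url in index.get(word, ()):
            scores[url] += 1
    # Highest score first; ties are broken alphabetically for stable output.
    return sorted(scores, key=lambda url: (-scores[url], url))

index = build_index(PAGES)
results = rank(index, "brew coffee")
print(results)
```

A page that was never added to `PAGES` can never appear in `results`, which mirrors the point above: a page has to be in the index before it can be displayed for a query.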

Crawling

Search engines discover new or updated sites by sending out a team of robots (crawlers or spiders). Content can include text, images, videos, web pages, and more. Regardless of the content format, it is identified through links.

Google’s crawler, “Googlebot,” begins with a few web pages and follows the links on these pages to find new URLs. When Google visits your site, it detects new and updated pages and analyzes the content for meaning. These pages are then added to, or updated in, the Google index.
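The link-following idea can be sketched in a few lines of Python. This is a simplified illustration, not Googlebot’s actual behavior: the `LinkCollector` class, the `extract_links` helper, and the sample HTML are hypothetical, and the sketch only reads `<a href>` links from a string rather than fetching live pages.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class LinkCollector(HTMLParser):
    """Collects absolute URLs from the href of every <a> tag."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, value))

def extract_links(base_url, html):
    """Return every link found in the given HTML, in document order."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links

# Hypothetical page content, for illustration only.
SAMPLE_HTML = """
<a href="/about">About</a>
<a href="blog/post-1">Post</a>
<a href="https://other.example/page">Elsewhere</a>
"""

links = extract_links("https://example.com/", SAMPLE_HTML)
print(links)
```

A crawler would repeat this step for each newly found URL, which is why pages with no links pointing to them are harder to discover.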

Indexing

There is often a delay between your site being crawled and being indexed. The more frequently you update or refresh your site, the more often search engines will crawl it. It’s important to note that just because your site can be discovered and crawled, it doesn’t mean it will automatically be stored in the index.

How long does it take for search engines to index a site?

New websites can take anywhere from a few days to a few weeks to be crawled. Indexing typically happens right after your site is crawled, but this is not guaranteed. Google’s Search Console (GSC) provides a free toolset that allows you to check your site’s presence on its search engine. With GSC, you can troubleshoot any issues with your site and request indexing.

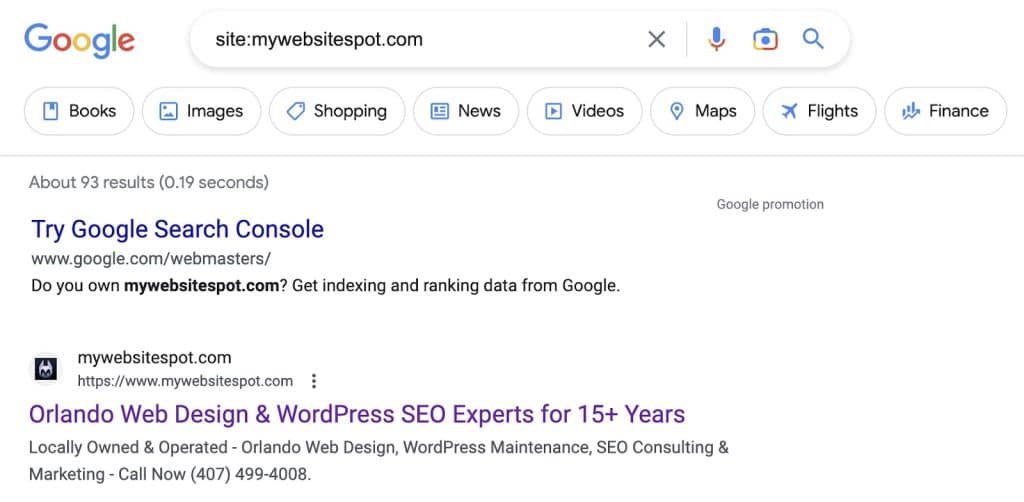

Check for indexing

There are a few ways to check if a search engine has indexed your site. The most popular way is to do a Google Web Search. To see which pages on your site are in the Google index, type “site:mywebsite.com” into the search bar.

You can see from this example that My Website Spot has 93 pages that Google has indexed. The number of results is not always accurate, but it gives you an idea of which pages have been indexed and can appear on a SERP. If you want more pages in the Google index, use the GSC to submit requests.

Indexing requests ask Google to update its index. Add the site or URL to the “Sites to search” section, found in the Basics tab of the Setup section in the search engine configuration. Telling search engines how to crawl your site can give you better control over what ends up in the index.

How does Googlebot see my pages?

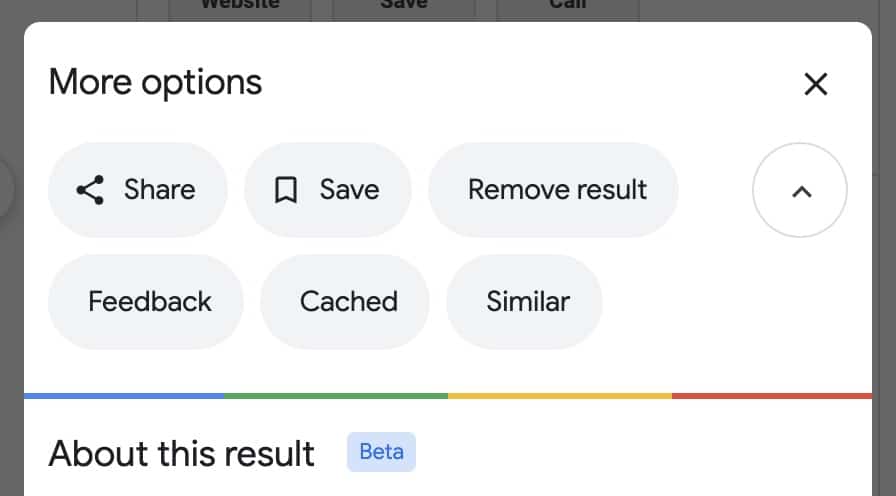

You can use your listing in the Google SERP to view which version of your page has been cached. Click the drop-down arrow next to the URL and choose “Cached” to see a snapshot of the cached page. You can also view the text-only version of your site to determine if your important content is being crawled and cached effectively.

Tell search engines what to look for

Crawlers discover pages by following links within your site and links pointing to your pages from other sites. Creating an XML sitemap (a file that lists all the URLs you want Google to index) helps crawlers find your main pages faster. You can usually find your sitemap by entering “https://yourdomain.com/sitemap.xml” in your browser’s address bar.
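If your site does not already have a sitemap, the file itself is simple. The Python sketch below builds a minimal one using the standard sitemap namespace. The `build_sitemap` function and the listed URLs are placeholders for illustration, and many site platforms and SEO plugins generate this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemap protocol (sitemaps.org).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return a sitemap XML string with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")

# Placeholder URLs; replace with the pages you want indexed.
sitemap_xml = build_sitemap([
    "https://yourdomain.com/",
    "https://yourdomain.com/services",
])
print(sitemap_xml)
```

The output would be saved as `sitemap.xml` at the root of the site. Optional fields such as `<lastmod>` are left out to keep the sketch short.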

You can use GSC to help Google discover all of your important pages. Go to the “Sitemaps” report in GSC under the “Indexing” section in the left menu. Enter the URL of your sitemap and hit “Submit.” It can take a few days for your sitemap to be processed, but when it’s done, you will see a green “Success” status next to your sitemap in the report.

What if my site isn’t indexing?

There are often pages that you don’t want search engines to find, like staging pages. While you may have any number of reasons for hiding them, the content you want searchers and crawlers to find needs to be accessible and indexable. Common reasons your pages are not showing up in the SERP include:

- Your site is new and has not been crawled or indexed yet.

- Your page violates search engine guidelines.

- You have duplicate content.

  - Content that is identical or very similar to content found on other pages.

- There are malware or security issues.

  - If a website contains malware or security issues, its URL may be removed from the search engine index to protect users from being exposed to these threats.

- The crawlers detected broken links or error pages.

  - If a website’s pages are frequently broken or return error messages (like “not found” 4XX errors or server error 5XX), its URL may be removed from the search engine index.

- Inactivity.

  - If a website is not frequently updated with new content or if it goes inactive for a prolonged period, then its URL may be removed from the search engine index.
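Of the reasons above, broken links and error pages are the easiest to check yourself. The sketch below groups HTTP status codes into the 4XX and 5XX classes mentioned in the list. The `classify_status` and `find_error_pages` functions and the `CRAWL_RESULTS` data are hypothetical; in practice the status codes would come from your server logs or a site audit tool.

```python
def classify_status(code):
    """Group an HTTP status code into a broad class."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client error (4XX)"
    if 500 <= code < 600:
        return "server error (5XX)"
    return "unknown"

def find_error_pages(crawl_results):
    """Return {url: status class} for every URL that returned 4XX or 5XX."""
    return {
        url: classify_status(code)
        for url, code in crawl_results.items()
        if 400 <= code < 600
    }

# Hypothetical crawl results (URL -> HTTP status code), for illustration only.
CRAWL_RESULTS = {
    "https://yourdomain.com/": 200,
    "https://yourdomain.com/old-page": 404,
    "https://yourdomain.com/moved": 301,
    "https://yourdomain.com/checkout": 500,
}

errors = find_error_pages(CRAWL_RESULTS)
for url, label in errors.items():
    print(url, "->", label)
```

Pages flagged this way are candidates for a fix or a redirect before you request indexing again.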

Keep your site relevant

My Website Spot has guided businesses in all industries through the Google indexing and ranking landscape since 2005. Our experts are trained in various areas of SEO and have over 20 years of combined experience and finesse in all things Google. We are always willing to provide free advice and help you get your pages noticed by search engines and searchers alike. Drop us a line, and we’ll help you navigate your way to higher rankings and greater visibility.